Future Work

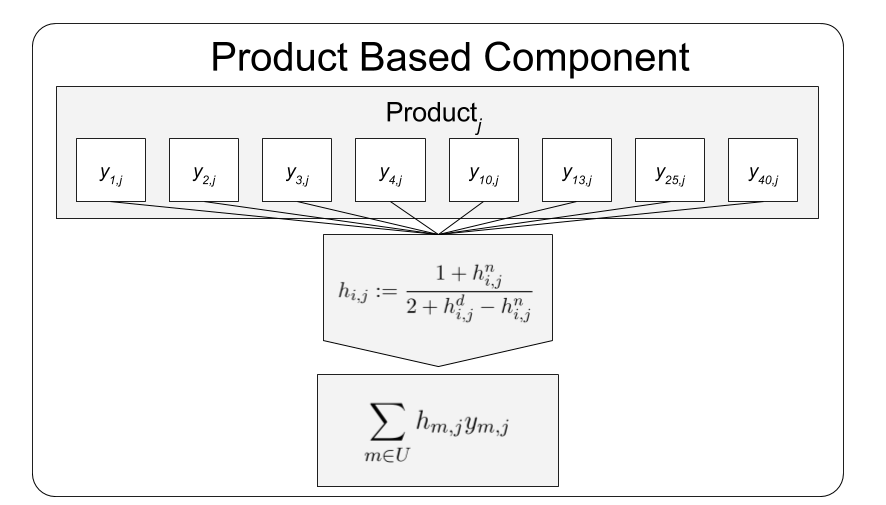

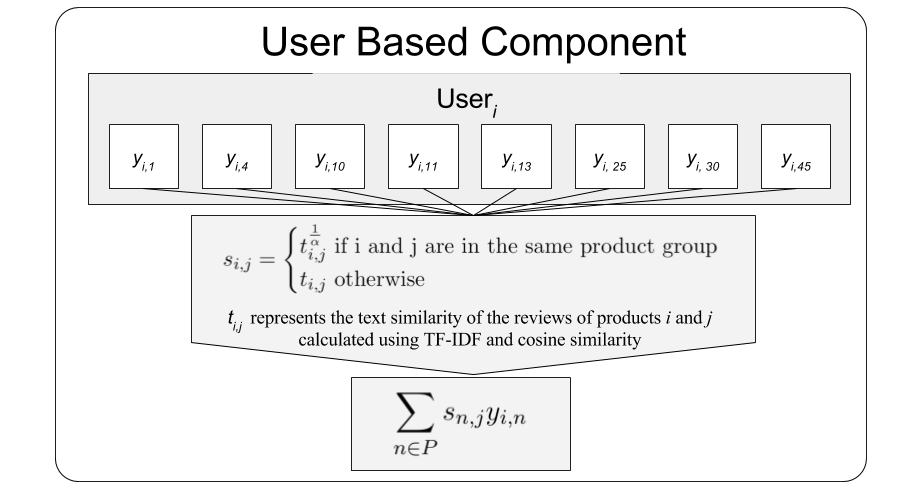

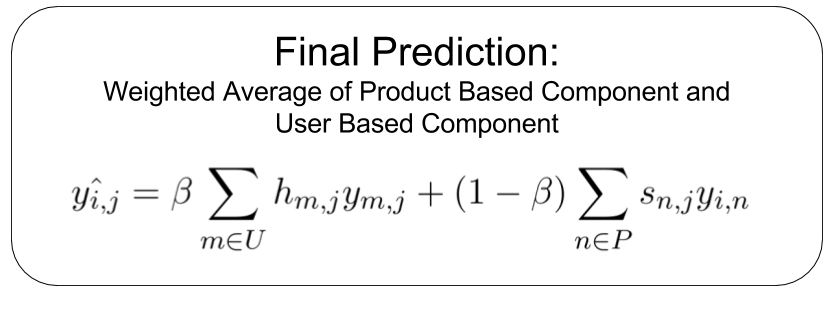

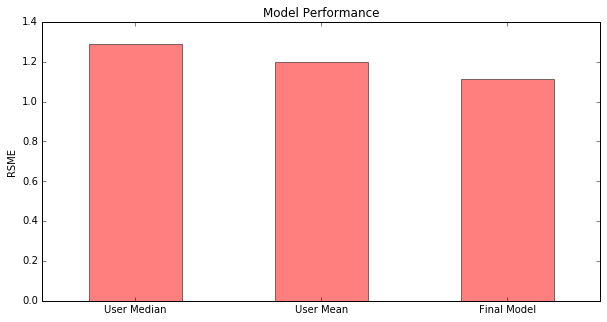

Though we beat our baseline models, our model performs only marginally better than a raw weighting of the product mean and the user mean. This suggests that the similarity matrix was not an effective tool in our model. It also suggests that user preferences, as far as we can tell, are not as individual as we believed: they mainly determine the benchmark around which each user rates.
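
For reference, a raw weighting of product mean and user mean can be sketched as follows. This is a minimal illustration, not our actual implementation: the function names `fit_means` and `predict`, the `(user, product, rating)` tuple layout, the fallback to the global mean for unseen users or products, and the mixing weight `alpha` are all assumptions made for the example.

```python
from collections import defaultdict


def fit_means(reviews):
    """Compute the global, per-user, and per-product mean ratings.

    `reviews` is an iterable of (user_id, product_id, rating) tuples
    (a hypothetical layout chosen for this sketch).
    """
    user_sum, user_cnt = defaultdict(float), defaultdict(int)
    prod_sum, prod_cnt = defaultdict(float), defaultdict(int)
    total, n = 0.0, 0
    for user, product, rating in reviews:
        user_sum[user] += rating
        user_cnt[user] += 1
        prod_sum[product] += rating
        prod_cnt[product] += 1
        total += rating
        n += 1
    global_mean = total / n
    user_mean = {u: user_sum[u] / user_cnt[u] for u in user_sum}
    prod_mean = {p: prod_sum[p] / prod_cnt[p] for p in prod_sum}
    return global_mean, user_mean, prod_mean


def predict(user, product, global_mean, user_mean, prod_mean, alpha=0.5):
    """Blend the product mean and the user mean with weight `alpha`.

    Unseen users or products fall back to the global mean (an assumption).
    """
    u = user_mean.get(user, global_mean)
    p = prod_mean.get(product, global_mean)
    return alpha * p + (1 - alpha) * u


# Toy data, invented purely for illustration.
reviews = [("u1", "p1", 5.0), ("u1", "p2", 3.0), ("u2", "p1", 4.0)]
g, um, pm = fit_means(reviews)
blended = predict("u1", "p1", g, um, pm, alpha=0.5)
```

In practice `alpha` would be tuned on held-out reviews; a blend like this is the reference point that a similarity-based component needs to beat clearly.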

In addition, computational limitations restricted the number of products and reviews that we could predict on. Given more funding and computation time, it might be worth rerunning the entire computation process on the complete dataset.

Additionally, more features on users and products would give more information with which to train the model's components. Obtaining them may be difficult: Amazon’s API does not offer an easy feature generation process for items, and finding individual users and collecting more of their reviews is hard because of privacy concerns. However, doing so may increase the amount of data to train on as well as improve the similarity matrix calculation process.

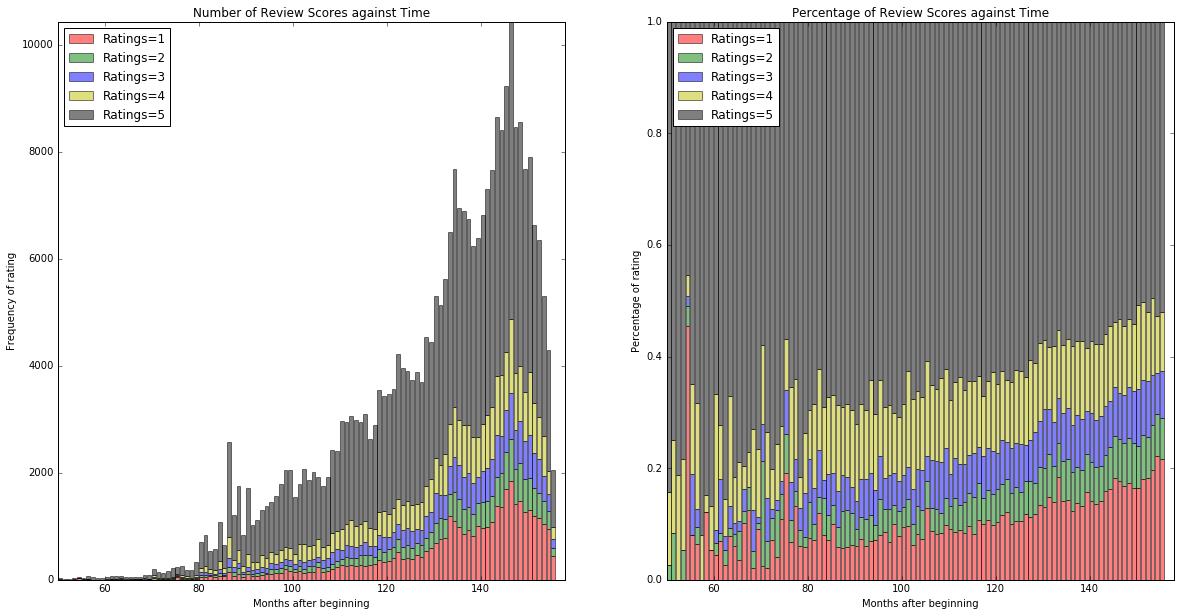

Finally, our model currently disregards the timestamps of reviews. As shown in the Data Exploration section, we noticed a time drift in scores. It is unclear what sort of predictive power or interpretation this feature would have in a more complicated final model, but it may be useful for normalizing user ratings over time when calculating the product-based components.
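
One possible way to normalize for time drift is sketched below; it is only an illustration of the idea, not something we implemented. It assumes reviews are `(user, product, rating, unix_timestamp)` tuples, buckets them by calendar year, and shifts each rating so that every year has the same mean as the overall dataset. The function name `detrend_by_year` and the choice of yearly buckets are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone


def detrend_by_year(reviews):
    """Remove the yearly mean shift from ratings.

    `reviews` is a list of (user_id, product_id, rating, unix_timestamp)
    tuples (a hypothetical layout). Each rating is shifted by the
    difference between the global mean and its year's mean.
    """
    def year_of(ts):
        return datetime.fromtimestamp(ts, tz=timezone.utc).year

    year_sum, year_cnt = defaultdict(float), defaultdict(int)
    for _, _, rating, ts in reviews:
        y = year_of(ts)
        year_sum[y] += rating
        year_cnt[y] += 1

    global_mean = sum(r[2] for r in reviews) / len(reviews)

    adjusted = []
    for user, product, rating, ts in reviews:
        y = year_of(ts)
        year_mean = year_sum[y] / year_cnt[y]
        adjusted.append((user, product, rating - year_mean + global_mean, ts))
    return adjusted


# Toy data, invented for illustration: two reviews in 2010, two in 2012.
reviews = [
    ("u1", "p1", 3.0, 1270000000),
    ("u2", "p1", 5.0, 1270000000),
    ("u1", "p2", 4.0, 1340000000),
    ("u2", "p2", 2.0, 1340000000),
]
adjusted = detrend_by_year(reviews)
```

Product means computed from the adjusted ratings would then no longer reflect when a product happened to be reviewed. Whether this improves predictions is an open question that would need to be tested.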
